Explain the structure of cache and the operations on cache.

Solution

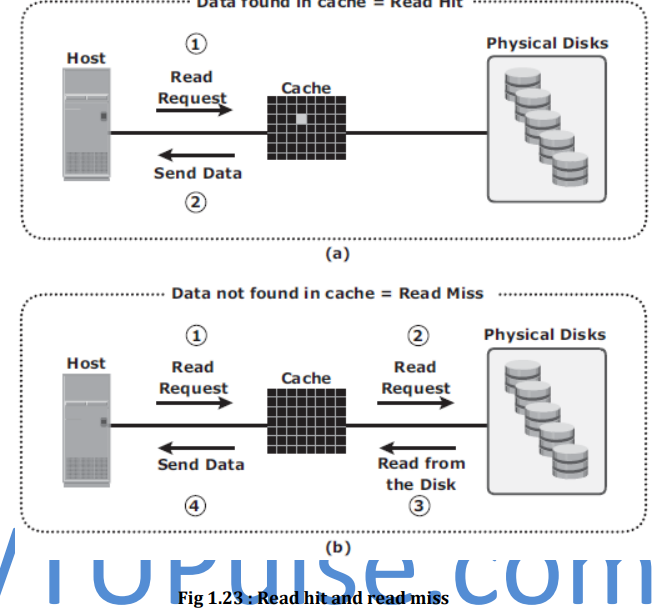

Structure Of Cache -

➢ Cache is organized into pages; a page is the smallest unit of cache allocation. The size of a cache page is configured according to the application I/O size.

➢ Cache consists of the data store and tag RAM.

➢ The data store holds the data, whereas the tag RAM tracks the location of the data in the data store (see Fig 1.22) and on the disk.

➢ Entries in tag RAM indicate where data is found in cache and where the data belongs on the disk.

➢ Tag RAM includes a dirty bit flag, which indicates whether the data in cache has been committed to the disk.

➢ It also contains time-based information, such as the time of last access, which is used to identify cached information that has not been accessed for a long period and may be freed up.
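The structure described above can be sketched in Python. This is only an illustrative model, not how any particular storage array implements it: the names `TagEntry`, `Cache`, `stale_pages`, and the 8 KB `PAGE_SIZE` are assumptions made for the example, as is the choice to free only clean pages (a dirty page would first have to be committed to disk).

```python
from dataclasses import dataclass, field

PAGE_SIZE = 8 * 1024  # assumed page size in bytes; in practice set per application I/O size


@dataclass
class TagEntry:
    """One tag RAM entry: ties a disk location to a page in the data store."""
    disk_block: int            # where the data belongs on the disk
    page_index: int            # where the data is found in the data store
    dirty: bool = False        # True while the page has not been committed to disk
    last_access: float = 0.0   # time of last access, used to spot idle pages


@dataclass
class Cache:
    num_pages: int
    data_store: list = field(default_factory=list)  # holds the page contents
    tag_ram: dict = field(default_factory=dict)     # disk_block -> TagEntry

    def __post_init__(self):
        # Allocate the data store as fixed-size, zero-filled pages.
        self.data_store = [bytes(PAGE_SIZE) for _ in range(self.num_pages)]

    def stale_pages(self, now, max_idle):
        """Return clean entries idle for longer than max_idle (candidates to free)."""
        return [e for e in self.tag_ram.values()
                if not e.dirty and now - e.last_access > max_idle]
```

Looking up a disk block in `tag_ram` answers both questions the tag RAM is responsible for: whether the data is in cache, and which page of the data store holds it.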

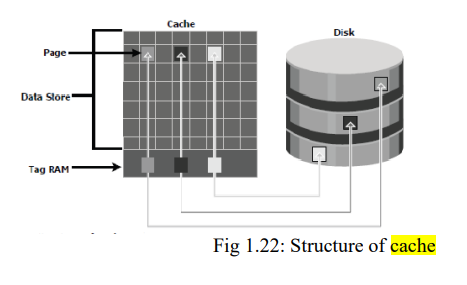

Read Operation with Cache

➢ When a host issues a read request, the storage controller reads the tag RAM to determine whether the required data is available in cache.

➢ If the requested data is found in the cache, it is called a read cache hit or read hit, and the data is sent directly to the host without any disk operation (see Fig 1.23[a]). This provides a fast response time to the host (about a millisecond).

➢ If the requested data is not found in cache, it is called a cache miss and the data must be read from the disk. The back-end controller accesses the appropriate disks and retrieves the requested data. The data is then placed in cache and finally sent to the host through the front-end controller.

➢ Cache misses increase I/O response time.

➢ A prefetch, or read-ahead, algorithm is used when read requests are sequential. In a sequential read request, a contiguous set of associated blocks is retrieved. Several other blocks that have not yet been requested by the host can be read from the disk and placed into cache in advance. When the host subsequently requests these blocks, the read operations will be read hits.

➢ This process significantly improves the response time experienced by the host.

➢ The intelligent storage system offers fixed and variable prefetch sizes.

➢ In fixed prefetch, the intelligent storage system prefetches a fixed amount of data. It is most suitable when I/O sizes are uniform.

➢ In variable prefetch, the storage system prefetches an amount of data in multiples of the size of the host request.
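The read path and the two prefetch modes can be illustrated with a small Python sketch. It is a simplified model under stated assumptions: the class `ReadCache`, its parameters `fixed_blocks` and `multiplier`, and the rule "a request is sequential if it starts where the previous one ended" are all hypothetical choices for the example. A plain dictionary stands in for the disks, and every requested block is assumed to exist on disk.

```python
class ReadCache:
    """Toy read cache with hit/miss accounting and fixed or variable prefetch."""

    def __init__(self, disk, prefetch_mode="fixed", fixed_blocks=4, multiplier=2):
        self.disk = disk                  # dict: block number -> data (stands in for the disks)
        self.cache = {}                   # block number -> data (tag RAM and data store combined)
        self.prefetch_mode = prefetch_mode
        self.fixed_blocks = fixed_blocks  # blocks fetched ahead in fixed mode
        self.multiplier = multiplier      # multiple of the request size in variable mode
        self.last_end = None              # first block after the previous request
        self.hits = 0
        self.misses = 0

    def _prefetch_count(self, request_len):
        if self.prefetch_mode == "fixed":
            return self.fixed_blocks
        return self.multiplier * request_len  # variable: scales with the host request

    def read(self, start, length=1):
        blocks = range(start, start + length)
        if all(b in self.cache for b in blocks):
            self.hits += 1                # read hit: no disk operation needed
        else:
            self.misses += 1              # read miss: fetch from disk into cache
            for b in blocks:
                self.cache[b] = self.disk[b]

        # Assumed sequential-detection rule: this request starts where the last one ended.
        sequential = (self.last_end == start)
        self.last_end = start + length
        if sequential:
            ahead = self._prefetch_count(length)
            for b in range(self.last_end, self.last_end + ahead):
                if b in self.disk:
                    self.cache.setdefault(b, self.disk[b])

        return [self.cache[b] for b in blocks]
```

With a sequential stream of two-block reads starting at block 0, the first two requests miss (the second one triggers prefetch), and the following requests are served from cache as read hits.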

Write Operation with Cache -

➢ Write operations with cache provide performance advantages over writing directly to disks.

➢ When an I/O is written to cache and acknowledged, it is completed in far less time (from the host’s perspective) than it would take to write directly to disk.

➢ Sequential writes also offer opportunities for optimization because, with the use of cache, many smaller writes can be coalesced into larger transfers to the disk drives.

➢ A write operation with cache is implemented in the following ways:

➢ Write-back cache: Data is placed in cache and an acknowledgment is sent to the host immediately. Later, data from several writes are committed to the disk. Write response times are much faster, as the write operations are isolated from the mechanical delays of the disk. However, uncommitted data is at risk of loss in the event of cache failures.

➢ Write-through cache: Data is placed in the cache and immediately written to the disk, and only then is an acknowledgment sent to the host. Because data is committed to disk as it arrives, the risk of data loss is low, but write response time is longer because of the disk operations.

➢ Cache can be bypassed under certain conditions, such as large write I/Os.

➢ In this implementation, if the size of an I/O request exceeds a predefined size, called the write-aside size, writes are sent to the disk directly to reduce the impact of large writes consuming a large amount of cache space.

➢ This is useful in an environment where cache resources are constrained and cache is required for small random I/Os.
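The three write behaviours above (write-back, write-through, and write-aside bypass) can be compared in one small Python sketch. It is a toy model, not a vendor implementation: the class `WriteCache`, the `flush` method, the 64 KB default `write_aside_size`, and the counter `disk_writes` are hypothetical names chosen for the example. Two simplifications are assumed: a flush coalesces all pending dirty blocks into a single transfer, and a bypassing write drops any older cached copy of the same block.

```python
class WriteCache:
    """Toy write cache supporting write-back, write-through, and write-aside."""

    def __init__(self, policy="write-back", write_aside_size=64 * 1024):
        self.policy = policy
        self.write_aside_size = write_aside_size  # larger writes bypass the cache
        self.cache = {}        # block number -> data held in cache
        self.dirty = set()     # blocks in cache not yet committed to disk
        self.disk = {}         # block number -> data (stands in for the disk drives)
        self.disk_writes = 0   # number of transfers sent to disk

    def write(self, block, data):
        if len(data) > self.write_aside_size:
            # Write aside: send the large write straight to disk.
            self.disk[block] = data
            self.disk_writes += 1
            self.cache.pop(block, None)   # assumed: drop any stale cached copy
            self.dirty.discard(block)
            return "ack"

        self.cache[block] = data
        if self.policy == "write-through":
            # Commit to disk before acknowledging the host.
            self.disk[block] = data
            self.disk_writes += 1
        else:
            # Write-back: acknowledge now, commit later; data is at risk until flushed.
            self.dirty.add(block)
        return "ack"

    def flush(self):
        """Commit all dirty blocks, modelled as one coalesced transfer."""
        if not self.dirty:
            return
        for block in sorted(self.dirty):
            self.disk[block] = self.cache[block]
        self.dirty.clear()
        self.disk_writes += 1
```

In write-back mode, four small writes followed by one `flush()` result in a single disk transfer, whereas in write-through mode each write costs one disk transfer before the acknowledgment is returned.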